
AI without the hype: Getting true value from LLMs without getting distracted

At Taktile, we have significant experience in weaving ML and AI into risk systems. My co-founder Maik Taro Wehmeyer and I spent most of our working lives revamping how banks and insurance companies run their automated decisions. The first investor in Taktile was one of the inventors of DeepMind’s AlphaFold. And given that our customer base includes some of the most forward-thinking financial institutions globally, we witness how finance benefits from adopting AI every day. Needless to say, we are extremely excited about AI’s potential to improve how risk teams make critical decisions at scale.

Truly getting value out of AI systems is notoriously difficult, and vendors’ tendency to overpromise and underdeliver is not helping. Earlier this year, a company published an impressive video demo of its “AI Software Engineer”, which set off a frenzy of excitement in the community and a surging valuation. It later turned out that the claims were misleading at best. This is just one example of so-called “AI washing”, a practice so prevalent that the Securities and Exchange Commission (SEC) issued an investor alert warning about false claims of AI capabilities. We wrote this guide to help risk teams see through stage-rigged demos, avoid falling for charlatans, and focus instead on what moves the needle.

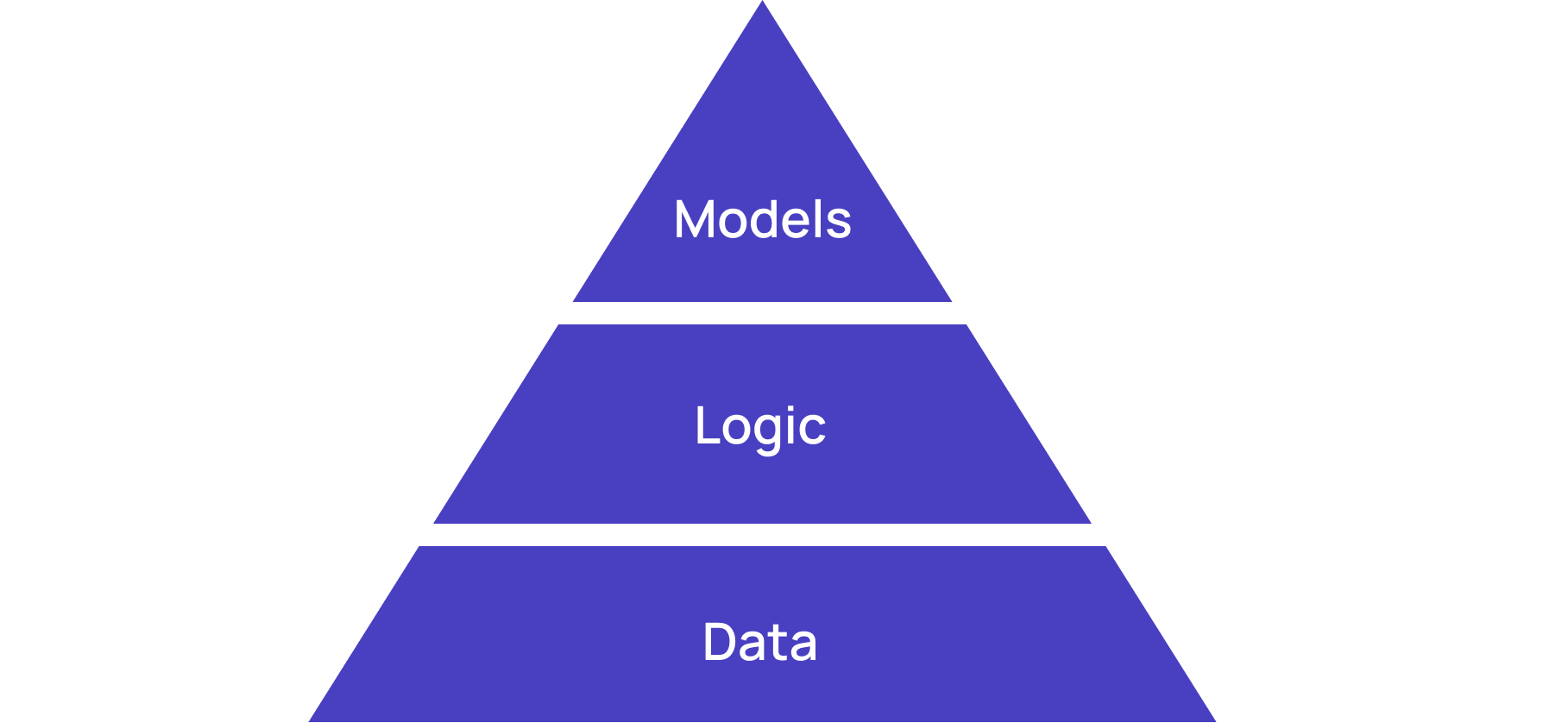

The pyramid of good decisions

Data, logic, and models are the three core ingredients for good decisions.

Data

High-quality data is the foundation of every good decision. Without trusted signals, it doesn't matter what is layered on top: any conclusion will be unreliable. Investing in data foundations, whether by adding external data sources or by improving how internal data is generated, refined, and monitored, is therefore almost always a high-impact activity, especially when that data feeds a downstream model.

Logic

At Taktile, we are convinced that the logic layer for decision systems is here to stay and that models and logic will coexist, balancing out each other’s strengths and weaknesses. It follows that more models will lead to more logic, not less, because logic is great for expressing strategic intent, domain expertise, and regulatory constraints. For example, your credit policy will contain limits set by funding partners. Your pricing policy will be constrained by competitors as well as by the legal environment. And an important factor in your decisions will be your credit team’s forward-looking judgment. None of these can be learned from historical data, nor should they be.

"We heavily rely on machine learning models for credit scoring. However, we always combine these models with carefully crafted credit logic to achieve our business goals. At Branch, the ML team and the credit team work hand in hand."
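To make the division of labor concrete, here is a minimal sketch of a model score wrapped in policy logic. Everything in it is hypothetical: the `Application` fields, the thresholds, and the state placeholder are illustrative assumptions, not Taktile’s or Branch’s actual rules. The point is that the model supplies only a probability of default, while partner limits, regulatory exclusions, and risk appetite live in explicit, reviewable rules.

```python
from dataclasses import dataclass
from typing import Tuple

# Hypothetical policy parameters for illustration. None of them is learned from data:
# each one encodes a business, partner, or regulatory constraint.
FUNDING_PARTNER_MAX_AMOUNT = 25_000   # cap imposed by a (hypothetical) funding partner
EXCLUDED_STATES = {"XX"}              # placeholder for jurisdictions where we may not lend
MAX_PD = 0.08                         # risk appetite chosen by the credit team


@dataclass
class Application:
    amount: float   # requested loan amount
    pd: float       # probability of default predicted by any upstream model
    state: str      # applicant's jurisdiction


def decide(app: Application) -> Tuple[str, str]:
    """Combine a model score with explicit policy rules; returns (outcome, reason)."""
    if app.state in EXCLUDED_STATES:             # regulatory constraint, checked before any score
        return "decline", "not licensed in applicant's state"
    if app.pd > MAX_PD:                          # model output mapped to a business decision
        return "decline", "predicted default risk above appetite"
    if app.amount > FUNDING_PARTNER_MAX_AMOUNT:  # partner limit: offer less instead of declining
        return "counteroffer", f"amount capped at {FUNDING_PARTNER_MAX_AMOUNT}"
    return "approve", "within policy"
```

Swapping in a better model changes only where `pd` comes from; the rules, and the reasons attached to them, stay exactly as the credit team wrote them.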

Models

Models come in a wide variety of shapes and forms. They come in the form of scores purchased from fraud vendors or credit bureaus. They come in the form of self-managed statistical or machine learning model predictions, for example from logistic regressions or boosted trees. And they increasingly come in the form of text, code, and data returned by large language models (LLMs).

Models are great when the future is likely to be similar to the past. For example, if you have collected significant data points on fraudulent behavior, you can use a model to accurately capture this pattern and flag it. This will work well as long as the pattern is stable and the findings generalize.

We present the ingredients as a pyramid because of our experience with hundreds of model deployments: focusing purely on model development is usually ineffective. Most often, the biggest levers for improving decision quality lie in improving the underlying data and in thinking through how model scores map to business outcomes via the logic layer. That is why we built Taktile around those three ingredients: a deep Data Marketplace, an intuitive and transparent logic layer, and the ability to infuse decisions with cutting-edge models.

Thinking through AI adoption

The pyramid of good decisions shapes our view of AI, which can help at every step of creating and improving decisions.

How AI affects the data layer

AI is great for turning unstructured data into structured data that can then be fed into models and scorecards. In our product documentation, for example, we show you how to use the OpenAI integration to turn business descriptions into NAICS codes for industry classification. This example illustrates how an irregular text-based input can be turned into something that you can systematically use to apply your own company’s industry restrictions.
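A minimal sketch of that pattern, assuming `llm` is any function that sends a prompt to a model and returns its text reply (this is not the OpenAI integration’s actual interface). The restriction list is a hypothetical example; NAICS industry group 7132 covers gambling industries. The important step is the strict parse: free text only becomes usable once it is reduced to a well-formed code that rules can act on.

```python
import re
from typing import Callable, Optional

# Hypothetical restriction list: NAICS prefixes this lender does not serve.
# 7132 is the NAICS industry group for gambling industries.
RESTRICTED_NAICS_PREFIXES = ("7132",)


def build_prompt(description: str) -> str:
    return (
        "Classify the business below into a single 6-digit NAICS code. "
        "Reply with the code only.\n\n"
        f"Business description: {description}"
    )


def parse_naics(reply: str) -> Optional[str]:
    """Extract a standalone 6-digit code; anything else counts as a failed classification."""
    match = re.search(r"\b(\d{6})\b", reply)
    return match.group(1) if match else None


def classify_and_screen(description: str, llm: Callable[[str], str]) -> dict:
    """`llm` is a placeholder for whatever model client you use: prompt in, text out."""
    code = parse_naics(llm(build_prompt(description)))
    if code is None:
        # Output we cannot parse goes to a human rather than being guessed at.
        return {"naics": None, "outcome": "manual_review"}
    restricted = code.startswith(RESTRICTED_NAICS_PREFIXES)
    return {"naics": code, "outcome": "restricted" if restricted else "eligible"}
```

For example, `classify_and_screen("Neighbourhood bakery", lambda p: "The code is 311811.")` yields `{"naics": "311811", "outcome": "eligible"}`. A production version would also check the code against the official NAICS table rather than only its format.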

AI can also help present complex information in a more natural way. For example, we have successfully used AI to explain in human-readable language why a machine learning model flagged a transaction for manual review to improve review quality and save time.
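One way to set this up, sketched under the assumption that per-feature contributions to the fraud score (for example, SHAP-style attributions) are already available. The feature names and the prompt wording are hypothetical. Grounding the prompt in the model’s own top drivers keeps the LLM explaining the actual decision instead of inventing a plausible story.

```python
from typing import List, Mapping, Tuple


def top_drivers(contributions: Mapping[str, float], k: int = 3) -> List[Tuple[str, float]]:
    """Return the k features that pushed the risk score up the most (positive contributions)."""
    positive = [(name, value) for name, value in contributions.items() if value > 0]
    return sorted(positive, key=lambda item: item[1], reverse=True)[:k]


def explanation_prompt(transaction: Mapping[str, object],
                       contributions: Mapping[str, float], k: int = 3) -> str:
    """Build a prompt that asks an LLM to explain the flag using only the supplied facts."""
    drivers = "\n".join(f"- {name}: +{value:.2f}" for name, value in top_drivers(contributions, k))
    return (
        "A fraud model flagged the transaction below for manual review.\n"
        f"Transaction: {dict(transaction)}\n"
        f"Largest contributions to the risk score:\n{drivers}\n"
        "In two plain-language sentences, explain to a reviewer why it was flagged. "
        "Use only the facts listed above."
    )
```

The resulting prompt can be sent to whichever model you use; the reviewer still sees the raw drivers alongside the generated text.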

Finally, AI can help find patterns where other techniques fall short. For example, we have found that pre-trained (and fine-tuned) models are better at classifying bank transactions than models trained from scratch, likely because of the knowledge about the world that is encoded in their weights. Better classification in turn helps improve credit underwriting decisions that rely on transactional signals.
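How classification feeds underwriting can be sketched as follows, assuming each transaction already carries a category label from an upstream classifier. The category names and the three signals are hypothetical examples. Misclassifying, say, a salary payment as a transfer would directly distort `salary_share_of_inflow`, which is why classification quality matters downstream.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Transaction:
    amount: float   # positive = money in, negative = money out
    category: str   # label assigned upstream by a transaction classifier


def cash_flow_signals(transactions: List[Transaction]) -> Dict[str, float]:
    """Aggregate classified transactions into features an underwriting model or rule can use."""
    inflow: Dict[str, float] = defaultdict(float)
    outflow: Dict[str, float] = defaultdict(float)
    for t in transactions:
        if t.amount >= 0:
            inflow[t.category] += t.amount
        else:
            outflow[t.category] += -t.amount
    total_in, total_out = sum(inflow.values()), sum(outflow.values())
    return {
        "salary_share_of_inflow": inflow["salary"] / total_in if total_in else 0.0,
        "gambling_share_of_outflow": outflow["gambling"] / total_out if total_out else 0.0,
        "net_cash_flow": total_in - total_out,
    }
```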

How AI affects the logic layer

It might be counterintuitive to think about AI when talking about logic, but we have found that AI is very helpful in building out and improving decision logic. Imagine a co-pilot that identifies bottlenecks in the decision process where many applicants are rejected, then recommends data sources to help reduce false positives, and thereby lets you raise approval rates. Most importantly, we believe AI can make decision authors’ lives easier without taking away control and transparency over how decisions are ultimately made.
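The first step of such a co-pilot, finding where applicants drop out, needs no AI at all. A minimal sketch, assuming the decision flow is an ordered list of pass/fail rules (rule names and thresholds are hypothetical). A co-pilot would start from a ranking like this before suggesting which data source might rescue the false positives at the top rule.

```python
from collections import Counter
from typing import Callable, List, Mapping, Optional, Sequence, Tuple

Applicant = Mapping[str, float]
Rule = Tuple[str, Callable[[Applicant], bool]]   # (rule name, returns True if the applicant passes)


def first_failed_rule(applicant: Applicant, rules: Sequence[Rule]) -> Optional[str]:
    """Rules are evaluated in order; the first failure is where the applicant was rejected."""
    for name, passes in rules:
        if not passes(applicant):
            return name
    return None


def rejection_funnel(applicants: Sequence[Applicant], rules: Sequence[Rule]) -> List[Tuple[str, float]]:
    """Share of all applicants rejected at each rule, largest bottleneck first."""
    counts = Counter(first_failed_rule(a, rules) for a in applicants)
    counts.pop(None, None)   # drop applicants who passed every rule
    return [(name, n / len(applicants)) for name, n in counts.most_common()]
```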

There are many pieces in the logic layer that AI can help with. We have discovered that AI is very good at defining test cases, helping you to find gaps in your logic that you might have otherwise missed. Similarly, AI can help you resolve unexpected errors when handling edge cases, or write code for you to capture complex business logic that is difficult to express with low-code building blocks only.
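To illustrate the kind of test cases meant here, below is a hypothetical decision function together with boundary and missing-data cases of the sort an assistant might draft. The policy and thresholds are invented for the example. Cases sitting exactly on and just below each cut-off, plus missing inputs, are where gaps in logic usually hide.

```python
from typing import List, Optional, Tuple


def decide_credit(income: Optional[float], age: int, bureau_score: Optional[int]) -> str:
    """Hypothetical decision logic, used only to illustrate test cases."""
    if age < 18:
        return "decline"
    if income is None or bureau_score is None:
        return "manual_review"   # missing data goes to a human instead of a silent decline
    if bureau_score < 600:
        return "decline"
    if income < 2000:
        return "manual_review"
    return "approve"


# Boundary and edge cases of the kind an assistant might propose: (income, age, score, expected).
TEST_CASES: List[Tuple[Optional[float], int, Optional[int], str]] = [
    (2500.0, 17, 700, "decline"),          # just under the age limit
    (2500.0, 18, 700, "approve"),          # exactly at the age limit
    (2500.0, 30, 599, "decline"),          # one point below the score cut-off
    (2500.0, 30, 600, "approve"),          # exactly at the score cut-off
    (1999.99, 30, 700, "manual_review"),   # just under the income threshold
    (None, 30, 700, "manual_review"),      # missing income
    (2500.0, 30, None, "manual_review"),   # missing bureau score (e.g. a thin file)
]


def failing_cases() -> List[tuple]:
    """Return every case whose actual outcome differs from the expected one."""
    return [(case, decide_credit(*case[:3])) for case in TEST_CASES
            if decide_credit(*case[:3]) != case[3]]
```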

Another strength of AI is summarization. For example, it can explain logic that others wrote, summarize changes in a human-readable way, and annotate decision graphs with headlines and descriptions.
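For change summaries, one reasonable split is to compute the facts deterministically and let the model only phrase them. A minimal sketch, assuming policy versions are stored as flat parameter dictionaries (the parameter names are hypothetical):

```python
from typing import Any, Dict, List


def diff_policy(old: Dict[str, Any], new: Dict[str, Any]) -> List[str]:
    """List parameter-level changes between two versions of a decision policy."""
    changes = []
    for key in sorted(old.keys() | new.keys()):
        if key not in new:
            changes.append(f"Removed {key} (was {old[key]!r})")
        elif key not in old:
            changes.append(f"Added {key} = {new[key]!r}")
        elif old[key] != new[key]:
            changes.append(f"Changed {key}: {old[key]!r} -> {new[key]!r}")
    return changes


def summary_prompt(changes: List[str]) -> str:
    """Ask an LLM to turn the factual change list into a short note for reviewers."""
    return ("Summarize these decision-policy changes for a credit committee in plain language. "
            "Do not mention anything not listed.\n" + "\n".join(f"- {c}" for c in changes))
```

Because the diff is exact, a reviewer can always check the generated summary against it line by line.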

How AI affects the model layer

Most importantly, AI models are themselves useful as part of a decision, for example to classify a transaction, reason about textual input, or to draft a customized email for a customer that can be reviewed and sent by a human in seconds. As a decision platform, we make it easy to try out new models, see how they perform, and evaluate them in their decision context. Given the rapid progress in AI models, it is important to maintain a layer on top of models that is agnostic to the underlying model. Otherwise, you are wedded to a specific provider’s model that might be obsolete within a few months.
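A model-agnostic layer can be as thin as an interface plus a registry. In this sketch, `TextModel`, `FixedLabelModel`, and the registry keys are hypothetical; a real adapter would wrap a specific vendor’s SDK behind the same `complete` method. Decision logic refers only to a configuration key, so comparing or replacing providers does not touch the decision itself.

```python
from typing import Dict, List, Protocol, Tuple


class TextModel(Protocol):
    """The only thing decision logic may depend on: prompt in, text out."""
    def complete(self, prompt: str) -> str: ...


class FixedLabelModel:
    """Hypothetical stand-in for a provider adapter; a real one would wrap that vendor's SDK."""
    def __init__(self, label: str) -> None:
        self.label = label

    def complete(self, prompt: str) -> str:
        return self.label


MODELS: Dict[str, TextModel] = {}


def classify_transaction(description: str, model_key: str) -> str:
    # Which model answers is a configuration choice (model_key), not a code change.
    reply = MODELS[model_key].complete(f"Classify this bank transaction: {description}")
    return reply.strip().lower()


def accuracy(model_key: str, labeled: List[Tuple[str, str]]) -> float:
    """Compare candidate models on the same labeled examples before switching."""
    hits = sum(classify_transaction(text, model_key) == label for text, label in labeled)
    return hits / len(labeled)
```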

In addition, AI can help train smaller, white-box models for use in decision-making. In US consumer credit, lenders must give applicants specific reasons for denying credit (under the Equal Credit Opportunity Act and its Regulation B, alongside the adverse action requirements of the Fair Credit Reporting Act). The Consumer Financial Protection Bureau has made clear that this requirement still applies when decisions rely on complex AI models. Lenders therefore either choose models that are explainable by design or use post-hoc methods to explain model output. In both scenarios, AI assistance can help train, evaluate, and monitor such models.
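As an example of a model that is explainable by design, here is a minimal points-based scorecard that derives reasons from the attributes where the applicant lost the most points relative to the maximum. The bins, points, and reason texts are hypothetical, and “points below maximum” is only one common convention for ranking reasons, not a compliance recipe.

```python
from typing import Dict, List, Mapping, Tuple

# Hypothetical points-based scorecard: per attribute, (lower_bound, points) bins in ascending order.
SCORECARD: Dict[str, List[Tuple[float, int]]] = {
    "months_at_address":    [(0, 5), (12, 15), (36, 25)],
    "debt_to_income":       [(0.0, 30), (0.3, 15), (0.5, 0)],
    "recent_delinquencies": [(0, 30), (1, 10), (3, 0)],
}
REASON_TEXT = {
    "months_at_address": "Time at current address",
    "debt_to_income": "Debt-to-income ratio",
    "recent_delinquencies": "Number of recent delinquencies",
}


def points_for(bins: List[Tuple[float, int]], value: float) -> int:
    """Points of the highest bin whose lower bound the value reaches."""
    points = bins[0][1]
    for lower, pts in bins:
        if value >= lower:
            points = pts
    return points


def score_with_reasons(applicant: Mapping[str, float], k: int = 2) -> Tuple[int, List[str]]:
    earned = {attr: points_for(bins, applicant[attr]) for attr, bins in SCORECARD.items()}
    # Shortfall = maximum attainable points minus points earned; largest shortfalls become reasons.
    shortfall = {attr: max(p for _, p in SCORECARD[attr]) - earned[attr] for attr in SCORECARD}
    ranked = sorted((a for a in shortfall if shortfall[a] > 0), key=lambda a: shortfall[a], reverse=True)
    return sum(earned.values()), [REASON_TEXT[a] for a in ranked[:k]]
```

An applicant with 6 months at their address, a 0.45 debt-to-income ratio, and no delinquencies scores 50, with “Time at current address” and “Debt-to-income ratio” as the top two reasons. Every reason traces back to a visible bin in the scorecard.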

Guardrails

Financial services have much higher standards for data security, privacy, and governance than most other industries — and rightly so. This creates pitfalls when rushing to adopt AI.

For example, most providers of hosted models reserve the right to review the data that flows through their systems. This might not matter for some use cases, such as processing publicly available company information. However, it becomes a problem with sensitive consumer data such as credit reports, which most hosted model providers are not adequately prepared to handle. Simply wrapping ChatGPT does not cut it.
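One guardrail layer, sketched under simple assumptions: replace sensitive values with placeholders before a prompt leaves your environment, and restore them in the reply afterwards. The three regex patterns are hypothetical and deliberately naive; real PII detection needs far more, and redaction complements rather than replaces contractual and deployment controls with the provider.

```python
import re
from typing import Dict, Tuple

# Hypothetical, deliberately simple patterns. Real PII detection needs much more than regexes.
PATTERNS = {
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "EMAIL": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"),
    "PHONE": re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b"),
}


def redact(text: str) -> Tuple[str, Dict[str, str]]:
    """Replace sensitive values with placeholders; the mapping never leaves your systems."""
    mapping: Dict[str, str] = {}
    for label, pattern in PATTERNS.items():
        def substitute(match: re.Match, label: str = label) -> str:
            placeholder = f"<{label}_{len(mapping) + 1}>"
            mapping[placeholder] = match.group(0)
            return placeholder
        text = pattern.sub(substitute, text)
    return text, mapping


def restore(text: str, mapping: Dict[str, str]) -> str:
    """Put the original values back into the model's reply, inside your own environment."""
    for placeholder, original in mapping.items():
        text = text.replace(placeholder, original)
    return text
```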

Similarly, critical decisions require a high level of reproducibility. Our customers expect to be able to explain decisions to their own customers, auditors, and regulators, even years later. This requires strict versioning and sign-off procedures for models, prompts, and input data. AI adoption is therefore only possible with a comprehensive layer of decision management infrastructure around it.
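A minimal sketch of what a per-decision audit record could capture, assuming prompts and inputs are JSON-serializable. The field names and the model identifier format are hypothetical. Hashes prove that archived artifacts are exactly the ones used; replaying a decision additionally requires storing the inputs themselves in a secure store.

```python
import hashlib
import json
from dataclasses import asdict, dataclass
from typing import Any


def fingerprint(obj: Any) -> str:
    """SHA-256 of canonical JSON, so identical content always yields the same hash."""
    canonical = json.dumps(obj, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


@dataclass(frozen=True)
class DecisionRecord:
    decision_id: str
    model_version: str    # pinned model identifier, never a floating "latest" alias
    prompt_version: str
    prompt_sha256: str
    input_sha256: str
    output: str
    approved_by: str      # who signed off this model/prompt combination


def record_decision(decision_id: str, model_version: str, prompt_version: str,
                    prompt_template: str, inputs: dict, output: str, approved_by: str) -> DecisionRecord:
    return DecisionRecord(decision_id, model_version, prompt_version,
                          fingerprint(prompt_template), fingerprint(inputs), output, approved_by)


def verify(record: DecisionRecord, prompt_template: str, inputs: dict) -> bool:
    """Check that archived prompt and inputs are exactly what the decision used."""
    return (record.prompt_sha256 == fingerprint(prompt_template)
            and record.input_sha256 == fingerprint(inputs))


def to_log_line(record: DecisionRecord) -> str:
    return json.dumps(asdict(record), sort_keys=True)
```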

Taktile’s roadmap

At Taktile, we have a full pipeline of AI features that align with our vision of helping financial institutions improve how they make critical decisions at scale. In doing so, we will focus on value rather than hype, and we will honor the strict guardrails and high standards that our customers expect from us.

A number of people have provided helpful comments on earlier drafts of this post. In particular, I would like to thank Peter Tegelaar for a discussion on how they combine traditional ideas with LLMs at Stenn. I would also like to thank Matt Mollison for suggesting that we emphasize the business dimension of the logic layer.

Additional reading

- Money20/20 Vegas Panel Recap: ChatGPT can write, but can AI underwrite?

- “Towards Generative Credit” by Matt Mollison and Matt Flannery (Branch)

- Cash flow based underwriting enhanced by ClassifAI by Silvr

Frequently Asked Questions (FAQs)

Q: How can financial institutions get true value from AI and LLMs?

A: Institutions can unlock value by combining AI models with high-quality data and decision logic. Large language models (LLMs) can structure unstructured data, enhance risk scoring, and support credit and fraud decisions while staying compliant with regulatory requirements.

Q: What is the pyramid of good decisions in risk management?

A: The pyramid emphasizes Data, Logic, and Models as foundational layers for effective financial decisions. High-quality data, clear decision logic, and AI or ML models together improve accuracy, transparency, and regulatory compliance in credit, underwriting, and fraud management.

Q: How does AI enhance decision logic for lenders?

A: AI can flag gaps in decision rules and summarize and annotate complex logic, helping teams maintain control, transparency, and operational efficiency.

Q: What are the risks of adopting AI without proper guardrails?

A: Without proper infrastructure, financial institutions risk data privacy breaches, regulatory non-compliance, and unreproducible decisions. Guardrails like model versioning, strict signoff procedures, and secure data management are essential for safe AI adoption.

Q: How can AI models be applied safely in lending and risk systems?

A: AI models can classify transactions, reason over textual inputs, or generate decision recommendations. Combined with models that are explainable by design or with post-hoc explanation methods, AI supports compliant credit decisions, fraud detection, and customer engagement while maintaining transparency and auditability. See AI in action on Taktile’s Decision Platform.